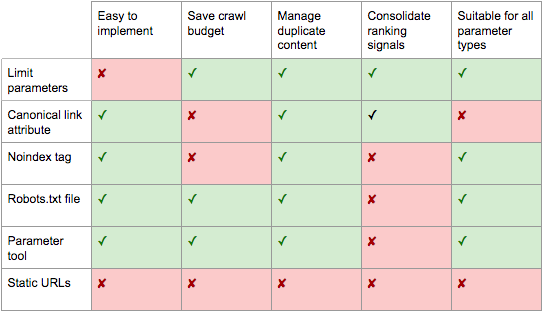

To optimize your crawl budget effectively, focus on managing URL parameters, eliminating duplicate content, and structuring your sitemap strategically:

Managing URL Parameters

- Use your robots.txt file to disallow crawling of URLs with unnecessary or session-based parameters (e.g., `?color=`, `?sort=`, `?filter=`, `?add_to_wishlist=`) so crawlers don't waste resources on multiple URL variants of the same content.

- Redirect URLs carrying tracking or affiliate parameters (e.g., `affiliateID`, `trackingID`) to their canonical versions with 301 redirects, or store that tracking data in cookies instead of the URL, to avoid duplicate content and consolidate crawl focus.

- Keep the URLs in your sitemap submissions clean and canonical to guide search engines toward the preferred versions of pages.
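Before deploying parameter rules, it helps to check which URLs they actually block. Crawlers such as Googlebot match robots.txt rules against the URL path plus query string, treat `*` as a wildcard and a trailing `$` as an end anchor, and apply the longest matching rule, with `Allow` winning ties (RFC 9309). Python's built-in `urllib.robotparser` does not understand `*` wildcards inside paths, so this minimal sketch hand-rolls a matcher. The `shop.example` URLs and the parameter list are hypothetical; each parameter gets a `?name=` and an `&name=` rule so it is caught whether it appears first or later in the query string.

```python
import re
from urllib.parse import urlsplit

# Hypothetical parameters that only create duplicate variants on this site.
PARAMS = ["color", "sort", "filter", "add_to_wishlist"]
# Block each parameter whether it is the first (?) or a later (&) query parameter.
RULES = [("disallow", f"/*{sep}{name}=") for name in PARAMS for sep in "?&"]


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, trailing '$' anchor) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(url: str, rules) -> bool:
    """Return True unless a rule blocks the URL (longest match wins, Allow wins ties)."""
    parts = urlsplit(url)
    target = (parts.path or "/") + ("?" + parts.query if parts.query else "")
    best_len, allowed = -1, True  # no matching rule means the URL is crawlable
    for directive, pattern in rules:
        if pattern_to_regex(pattern).match(target):  # rules match from the path start
            longer = len(pattern) > best_len
            tie_allow = len(pattern) == best_len and directive == "allow"
            if longer or tie_allow:
                best_len, allowed = len(pattern), directive == "allow"
    return allowed


def render_robots(rules) -> str:
    """Render the rules as a robots.txt group for all user agents."""
    lines = ["User-agent: *"] + [f"{d.capitalize()}: {p}" for d, p in rules]
    return "\n".join(lines)


print(render_robots(RULES))
print(is_allowed("https://shop.example/shoes?page=2&sort=price", RULES))  # False
```

Pairing `?` and `&` rules is a deliberate choice: a looser rule such as `/*?*color=` would also block unrelated parameters like `fabriccolor=`.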

Eliminating Duplicate Content

- Identify and remove or consolidate duplicate pages caused by URL parameters, session IDs, or automatically generated pages to prevent crawl budget waste and improve indexing quality.

- Use canonical tags to indicate the preferred version of a page when duplicates are unavoidable.

- Avoid indexing low-value or near-duplicate pages such as internal search results, tag pages, or thin content pages.
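One way to find duplicates caused by parameters and session IDs is to reduce every crawled URL to a normalized key and group the URLs that share it; each group is a candidate for a single canonical page. This sketch assumes a hypothetical site where `affiliateID`, `trackingID`, `sessionid`, and any `utm_*` parameter never change page content, and where parameter order never matters; adjust `IGNORED_PARAMS` to your own site. It also renders the `<link rel="canonical">` tag each variant could carry.

```python
import html
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters that never change page content on this site (compared lowercase).
IGNORED_PARAMS = {"affiliateid", "trackingid", "sessionid"}
IGNORED_PREFIXES = ("utm_",)


def canonicalize(url: str) -> str:
    """Drop ignored parameters, sort the rest, lowercase scheme/host, strip the fragment."""
    parts = urlsplit(url)
    # Sorting assumes parameter order never changes what the page shows.
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS and not key.lower().startswith(IGNORED_PREFIXES)
    )
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path or "/", urlencode(kept), "")
    )


def group_duplicates(urls):
    """Map each canonical URL to its variants, keeping only groups with 2+ variants."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonicalize(url)].append(url)
    return {canon: variants for canon, variants in groups.items() if len(variants) > 1}


def canonical_tag(url: str) -> str:
    """Build the <link rel="canonical"> element a variant page could include."""
    return f'<link rel="canonical" href="{html.escape(canonicalize(url))}">'


crawled = [
    "https://Shop.example/shoes?color=red&affiliateID=42",
    "https://shop.example/shoes?utm_source=news&color=red",
    "https://shop.example/shoes?color=red",
    "https://shop.example/boots",
]
print(group_duplicates(crawled))
```

Here the three `shoes` variants collapse into one group, while `boots` has no duplicates and is left out.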

Optimizing Sitemap Structure

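- Split large XML sitemaps into smaller, topic-focused sitemaps, referenced from a sitemap index file, to help search engines crawl and index your site more efficiently. The sitemap protocol caps each file at 50,000 URLs or 50 MB uncompressed.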

- Ensure your sitemap includes only indexable, high-quality URLs, and exclude redirected (3xx), broken (4xx), and server-error (5xx) URLs as well as non-indexable pages such as those marked `noindex`.

- Submit sitemaps with canonical URLs to reinforce which pages should be prioritized for crawling and indexing.
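The three sitemap rules above can be combined in one build step. This sketch filters hypothetical crawl records down to URLs that return 200, are indexable, and declare themselves canonical, groups them by first path segment as a stand-in for "topic", splits each group at the protocol's 50,000-URL limit, and writes a sitemap index listing the resulting files. The `Page` record, the `base` URL, and the grouping rule are assumptions for illustration, not part of any standard tool; the sketch does not check the 50 MB size limit.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from dataclasses import dataclass
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # protocol limit per file (files must also stay under 50 MB)


@dataclass
class Page:
    """Hypothetical crawl record for one URL."""
    url: str
    status: int      # last HTTP status code observed
    indexable: bool  # False if the page is marked noindex
    canonical: str   # URL the page declares as canonical


def eligible(page: Page) -> bool:
    """Keep only 200-status, indexable, self-canonical pages."""
    return page.status == 200 and page.indexable and page.canonical == page.url


def to_xml(root_tag: str, child_tag: str, locs) -> bytes:
    """Serialize a <urlset> or <sitemapindex> whose children each hold one <loc>."""
    root = ET.Element(root_tag, xmlns=SITEMAP_NS)
    for loc in locs:
        ET.SubElement(ET.SubElement(root, child_tag), "loc").text = loc
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


def build_sitemaps(pages, base, max_urls=MAX_URLS_PER_SITEMAP):
    """Return {filename: xml_bytes}: topic sitemaps split at max_urls, plus an index."""
    by_topic = defaultdict(list)
    for page in filter(eligible, pages):
        # Assumption: the first path segment stands in for the page's topic.
        topic = urlsplit(page.url).path.strip("/").split("/")[0] or "home"
        by_topic[topic].append(page.url)
    files = {}
    for topic, urls in sorted(by_topic.items()):
        for start in range(0, len(urls), max_urls):
            name = f"sitemap-{topic}-{start // max_urls + 1}.xml"
            files[name] = to_xml("urlset", "url", urls[start:start + max_urls])
    # The index lists every topic sitemap built above (it is added after the list is made).
    files["sitemap-index.xml"] = to_xml("sitemapindex", "sitemap", [base + n for n in files])
    return files
```

You would then upload each file under `base` and submit only `sitemap-index.xml` to search engines; the `max_urls` parameter exists mainly so the splitting can be tested with small inputs.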

Additional best practices include:

- Implementing a logical site architecture with a clear hierarchy and hub pages to guide crawlers to important content efficiently.

- Balancing internal linking to distribute crawl equity and prioritize key pages.

- Avoiding blocking resources essential for rendering, such as CSS and JavaScript files, so search engines can render, crawl, and index pages properly; this matters most on JavaScript-heavy sites.

- Monitoring and fixing broken links, redirects, and slow-loading pages to maintain crawl efficiency.
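Site architecture and broken-link checks can both be run over an internal link graph. This sketch computes each page's click depth from the home page with a breadth-first search, then flags pages deeper than an illustrative threshold, pages known from `statuses` (for example, a sitemap or server logs) that no internal link reaches, and internal links that point to pages returning 4xx or 5xx. The `links` and `statuses` inputs are hypothetical exports from your own crawler, and the three-click default is a common rule of thumb rather than a search-engine requirement.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search from the home page; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit(links, statuses, home, max_depth=3):
    """Flag deep pages, orphan pages, and internal links to error pages."""
    depths = click_depths(links, home)
    return {
        "too_deep": sorted(p for p, d in depths.items() if d > max_depth),
        # Pages we know exist (e.g. from a sitemap) but no internal link reaches.
        "orphans": sorted(p for p in statuses if p not in depths),
        # Only flag targets with a known 4xx/5xx status; unknown targets are skipped.
        "broken_links": sorted(
            (src, dst)
            for src, targets in links.items()
            for dst in targets
            if statuses.get(dst, 0) >= 400
        ),
    }
```

Deep pages are candidates for links from hub pages, orphans need internal links (or removal from the sitemap), and broken links should be updated or redirected.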

These strategies collectively help ensure that search engines spend their crawl budget on your most valuable pages, improving overall SEO performance and site visibility.

WebSeoSG offers the highest quality website traffic services in Singapore. We provide a variety of traffic services for our clients, including website traffic, desktop traffic, mobile traffic, Google traffic, search traffic, eCommerce traffic, YouTube traffic, and TikTok traffic. Our website boasts a 100% customer satisfaction rate, so you can confidently purchase large amounts of SEO traffic online. For just 40 SGD per month, you can immediately increase website traffic, improve SEO performance, and boost sales!

Having trouble choosing a traffic package? Contact us, and our staff will assist you.
