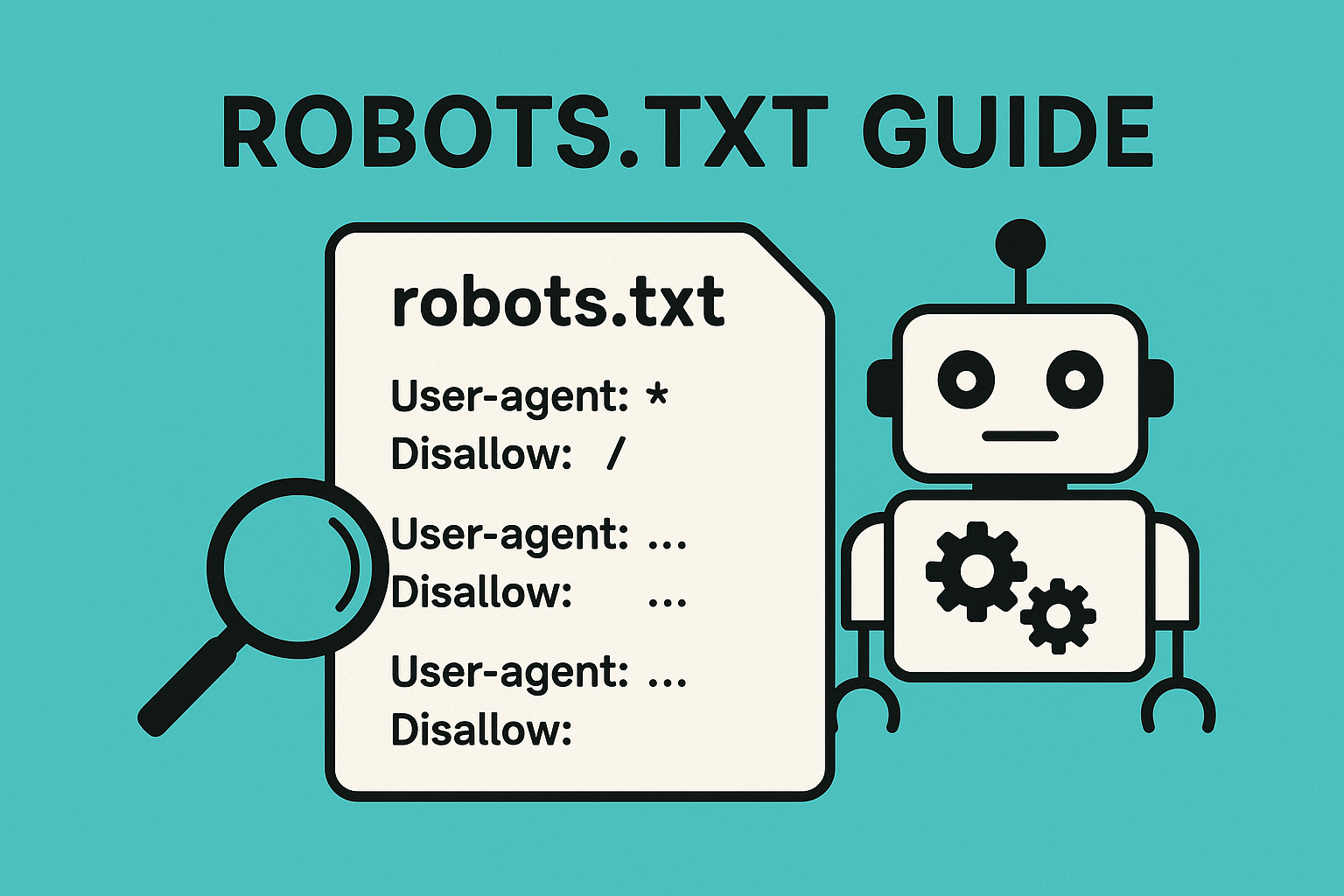

Robots.txt helps manage crawl budget and SEO efficiency by controlling which parts of a website search engine bots are allowed to crawl. This selective crawling helps search engines spend their limited crawl resources on the most important and valuable pages, which can improve how quickly and completely those pages are indexed.
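As a rough illustration of this idea, the sketch below parses a small robots.txt with Python's standard `urllib.robotparser` module and checks which URLs a compliant bot would be allowed to fetch. The rules, the paths (`/admin/`, `/staging/`, `/search`), and the `example.com` domain are hypothetical. The standard-library parser uses simple prefix matching, so it does not model wildcard patterns or the exact rule-precedence logic of any particular search engine.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep bots out of low-value areas, leave the rest open.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
Disallow: /search
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawlable(parser: RobotFileParser, urls, user_agent: str = "*") -> dict:
    """Map each URL to True if the given user agent may fetch it."""
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    sample_urls = [
        "https://example.com/",
        "https://example.com/blog/post",
        "https://example.com/admin/login",
        "https://example.com/staging/home",
        "https://example.com/search?q=shoes&page=3",
    ]
    for url, allowed in crawlable(parser, sample_urls).items():
        print(f"{'ALLOW' if allowed else 'BLOCK'}  {url}")
```

Running a check like this against a list of important URLs before deploying a robots.txt change is one way to catch a rule that accidentally blocks pages you want crawled.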

Key points on how robots.txt influences crawl budget and SEO:

- Crawl Budget Definition: Crawl budget is the number of pages or URLs a search engine bot crawls on a site within a given timeframe. It depends on factors like site size, server responsiveness, site popularity, and freshness of content.

- Directing Crawlers: By using robots.txt directives, webmasters can block bots from crawling low-value, duplicate, or resource-heavy pages (e.g., admin folders, staging environments, or URLs with excessive query parameters). This prevents bots from wasting crawl budget on unimportant content and prioritizes high-value pages for crawling and indexing.

- Preventing Server Overload: Restricting access to resource-intensive sections reduces server load, allowing faster response times for important pages. This can improve crawl rate and frequency, helping fresh content get indexed more quickly.

- Avoiding Crawl Traps: Blocking URLs with many query parameters or infinite URL variations prevents search engines from getting stuck crawling redundant or near-duplicate pages, preserving crawl budget for meaningful content.

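- Limitations: Robots.txt only controls crawling, not indexing. Pages blocked by robots.txt can still appear in search results if they are linked from other sites. To remove pages from search results, other methods such as the noindex directive or page removal are necessary. Note that a crawler can only see a noindex directive on a page it is allowed to fetch, so a page should not be blocked in robots.txt while you rely on noindex to remove it.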

- Mobile-First Considerations: Efficient crawl budget management also involves ensuring mobile versions of pages are optimized, as Google uses mobile-first indexing. Poor mobile performance can reduce crawl efficiency.
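To make the crawl-trap point above more concrete, here is a minimal sketch that groups a list of crawled URLs by path and counts how many distinct query-string variants each path has. The sample URLs, the `find_crawl_traps` helper, and the `max_variants` threshold are all hypothetical. Paths it flags are only candidates for review, not an automatic list of rules to add to robots.txt.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit

# Hypothetical sample of URLs a bot requested, e.g. taken from server logs.
SAMPLE_URLS = [
    "https://example.com/products?color=red",
    "https://example.com/products?color=blue",
    "https://example.com/products?color=red&size=m",
    "https://example.com/products?size=m&color=red",  # same parameters, reordered
    "https://example.com/products?color=red&size=l",
    "https://example.com/blog/post?utm_source=x",
    "https://example.com/about",
]


def find_crawl_traps(urls, max_variants: int = 3) -> dict:
    """Return {path: variant_count} for paths with more than
    max_variants distinct query-string combinations."""
    variants = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        if not parts.query:
            continue  # URLs without parameters cannot multiply into variants
        # Sort the parameters so reordered duplicates count as one variant.
        key = tuple(sorted(parse_qsl(parts.query, keep_blank_values=True)))
        variants[parts.path].add(key)
    return {
        path: len(keys)
        for path, keys in variants.items()
        if len(keys) > max_variants
    }


if __name__ == "__main__":
    for path, count in find_crawl_traps(SAMPLE_URLS).items():
        print(f"{path}: {count} distinct parameter combinations")
```

A path with many parameter combinations and little unique content is a reasonable candidate for a Disallow rule or for canonicalization, depending on whether those URLs need to be crawled at all.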

In summary, a well-configured robots.txt file steers search engine bots toward the most important content, reduces unnecessary load on the server, and supports SEO by making better use of the crawl budget. Because it controls crawling rather than indexing, it works best alongside indexing controls such as noindex.
