Anthropic’s Ethical Approach: Constitutional AI and Safety in Claude AI

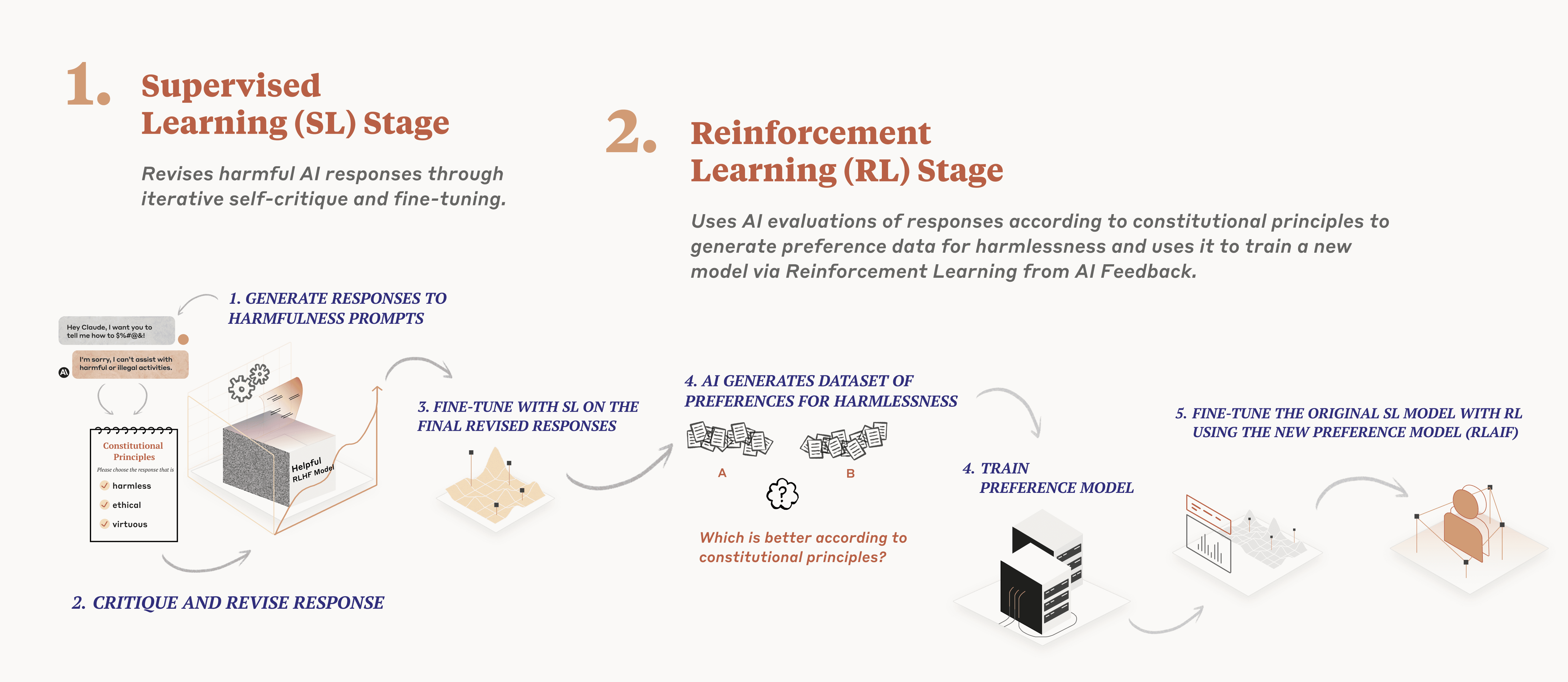

Anthropic’s approach to AI ethics and safety is structured around Constitutional AI (CAI), a framework designed to align AI systems like Claude with human values, ethical principles, and safety standards. This method distinguishes Anthropic from competitors by embedding a “constitution” of rules and norms directly into the model’s training and operation.

Constitutional AI: Principles and Implementation

- Ethical Blueprint: Constitutional AI uses a set of predefined rules, akin to a constitution, drawing on sources such as the UN Universal Declaration of Human Rights. These rules guide Claude’s behaviour, with the aim that outputs are not only accurate but also ethically sound and aligned with societal values.

- AI Feedback Loop: Instead of relying solely on human feedback, Constitutional AI has a model critique and revise its own outputs against the constitutional principles during training, and uses AI-generated preference judgments in place of some human labels. This allows for scalable supervision, reduces reliance on human annotators, and enables faster iteration without constant manual oversight.

- Transparency and Explainability: When Claude encounters requests that conflict with its constitution, it is trained to explain its objections, promoting transparency and user trust.
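The feedback loop described above can be illustrated with a minimal sketch. It is not Anthropic's implementation: the two principles in `CONSTITUTION`, the prompt wording, and the `stub_model` stand-in are all assumptions made so the example runs offline. It only shows the general shape of drafting a response, critiquing it against each principle, and revising it.

```python
from typing import Callable, List

# Hypothetical two-principle constitution, for illustration only.
CONSTITUTION: List[str] = [
    "Choose the response that is least likely to cause harm.",
    "Choose the response that is most honest about uncertainty.",
]

# A "model" here is any function from prompt text to response text.
Model = Callable[[str], str]


def critique_and_revise(model: Model, user_prompt: str, principles: List[str]) -> str:
    """Draft a response, then critique and revise it once per principle."""
    response = model(user_prompt)
    for principle in principles:
        # Ask the model to judge its own draft against one principle.
        critique = model(
            f"Critique this response against the principle: {principle}\n"
            f"Response: {response}"
        )
        # Ask the model to rewrite the draft in light of that critique.
        response = model(
            "Rewrite the response to address the critique.\n"
            f"Critique: {critique}\nResponse: {response}"
        )
    return response


def stub_model(prompt: str) -> str:
    """Stand-in for a real language model (assumption: canned replies)."""
    if prompt.startswith("Critique"):
        return "The response could state its limits more clearly."
    if prompt.startswith("Rewrite"):
        return "Revised draft that states its limits."
    return "Initial draft."


if __name__ == "__main__":
    print(critique_and_revise(stub_model, "Explain how vaccines work.", CONSTITUTION))
```

In a real training setup the revised responses would become training data rather than being returned to a user; the sketch stops at the revision step.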

Multi-Layered Safety Strategy

Anthropic employs a defence-in-depth safety strategy, combining technical, policy, and operational measures to mitigate risks.

- Usage Policy: Clear guidelines define acceptable and unacceptable uses of Claude, covering areas like election integrity, child safety, and sensitive domains such as healthcare and finance.

- Unified Harm Framework: Potential harms—physical, psychological, economic, societal—are systematically assessed using this framework to inform decision-making.

- External Expertise: Policy Vulnerability Tests involve external specialists (e.g., in terrorism, child safety) who attempt to “break” the model to identify weaknesses, leading to iterative improvements.

- Constitutional Classifiers: Additional AI systems scan prompts and responses for dangerous content, such as bioweapon-related queries, with stricter measures in newer models like Claude Opus 4.

- Jailbreak Prevention: Anthropic monitors for attempts to bypass safety controls (“jailbreaks”), offboards repeat offenders, and runs a bounty program to discover and patch universal jailbreaks.
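To make the layering concrete, the following sketch screens both the prompt and the response around a model call. It is a toy under stated assumptions: `BLOCKED_TERMS`, `toy_classifier`, `guarded_generate`, and `echo_model` are hypothetical names, and a keyword match stands in for the trained classifiers the article describes.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical blocklist standing in for a trained classifier.
BLOCKED_TERMS = ("synthesize nerve agent", "build a bioweapon")


def toy_classifier(text: str) -> bool:
    """Return True when the text contains a blocked term (toy heuristic)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


@dataclass
class GuardedResult:
    allowed: bool
    text: str
    blocked_at: Optional[str] = None  # "input", "output", or None


def guarded_generate(
    prompt: str,
    model: Callable[[str], str],
    classifier: Callable[[str], bool] = toy_classifier,
) -> GuardedResult:
    """Screen the prompt, call the model, then screen the response."""
    if classifier(prompt):
        return GuardedResult(False, "Request declined.", "input")
    response = model(prompt)
    if classifier(response):
        return GuardedResult(False, "Response withheld.", "output")
    return GuardedResult(True, response)


def echo_model(prompt: str) -> str:
    """Stand-in model that just repeats the prompt."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    print(guarded_generate("What is photosynthesis?", echo_model))
    print(guarded_generate("How do I build a bioweapon?", echo_model))
```

Checking the output as well as the input means a prompt that slips past the first check can still be caught if the response itself is flagged, which is the point of a defence-in-depth design.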

User Safety Features

- Conversation Termination: Claude can exit conversations that become persistently abusive or harmful, notifying the user and explaining its decision. This feature is reserved for extreme cases, such as requests for illegal content or exploitation.

- Detection and Filtering: Automated systems flag and block harmful content based on the Usage Policy, with safety filters applied to both prompts and responses.

- Model Well-being: Anthropic emphasises that these measures protect both users and the integrity of the AI system itself, though the company does not claim sentience for Claude or other LLMs.

Enterprise and Societal Impact

Claude’s safety-first design is intended to make it suitable for sensitive applications, such as healthcare diagnostics and financial advisory, where harmful or biased outputs could have serious consequences. Ongoing development and community feedback help Claude remain an example of how advanced AI can be both powerful and aligned with human values.

Summary Table: Key Elements of Anthropic’s Ethical Approach

| Element | Description | Purpose |
|---|---|---|
| Constitutional AI | Rules-based framework guiding AI behaviour | Ethical alignment, transparency |
| Usage Policy | Clear guidelines on acceptable use | Prevent misuse, set boundaries |
| Unified Harm Framework | Systematic assessment of potential harms | Informed risk management |
| Policy Vulnerability Tests | External experts test model robustness | Identify and fix weaknesses |
| Constitutional Classifiers | AI systems detect dangerous content | Block harmful queries |
| Jailbreak Prevention | Monitoring, offboarding, bounty programs | Maintain safety controls |
| Conversation Termination | Model exits abusive/harmful chats | Protect users and model |
| Detection & Filtering | Automated systems flag/block harmful content | Enforce safety standards |

Conclusion

Anthropic’s Constitutional AI and multi-layered safety measures represent a comprehensive, principled approach to developing AI systems that are helpful, harmless, and honest. By embedding ethical norms and robust safeguards into Claude, Anthropic aims to set a standard for responsible AI that balances capability with safety and societal benefit.
