For large websites, advanced robots.txt techniques focus on optimising crawl budget, controlling bot access precisely, and improving SEO efficiency. Key strategies include:

-

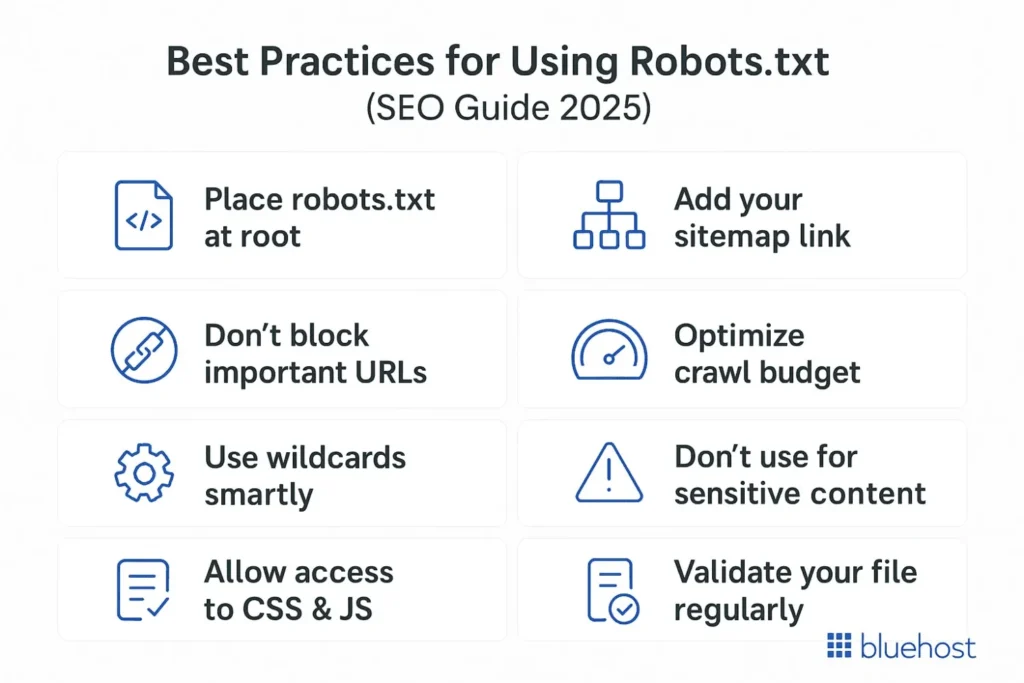

Crawl Budget Optimization: Use robots.txt to block low-value or duplicate content sections such as tag pages, archive pages, login pages, or internal search results. This helps search engines focus crawling on high-priority pages like product or service pages, improving indexing efficiency and ranking potential.

-

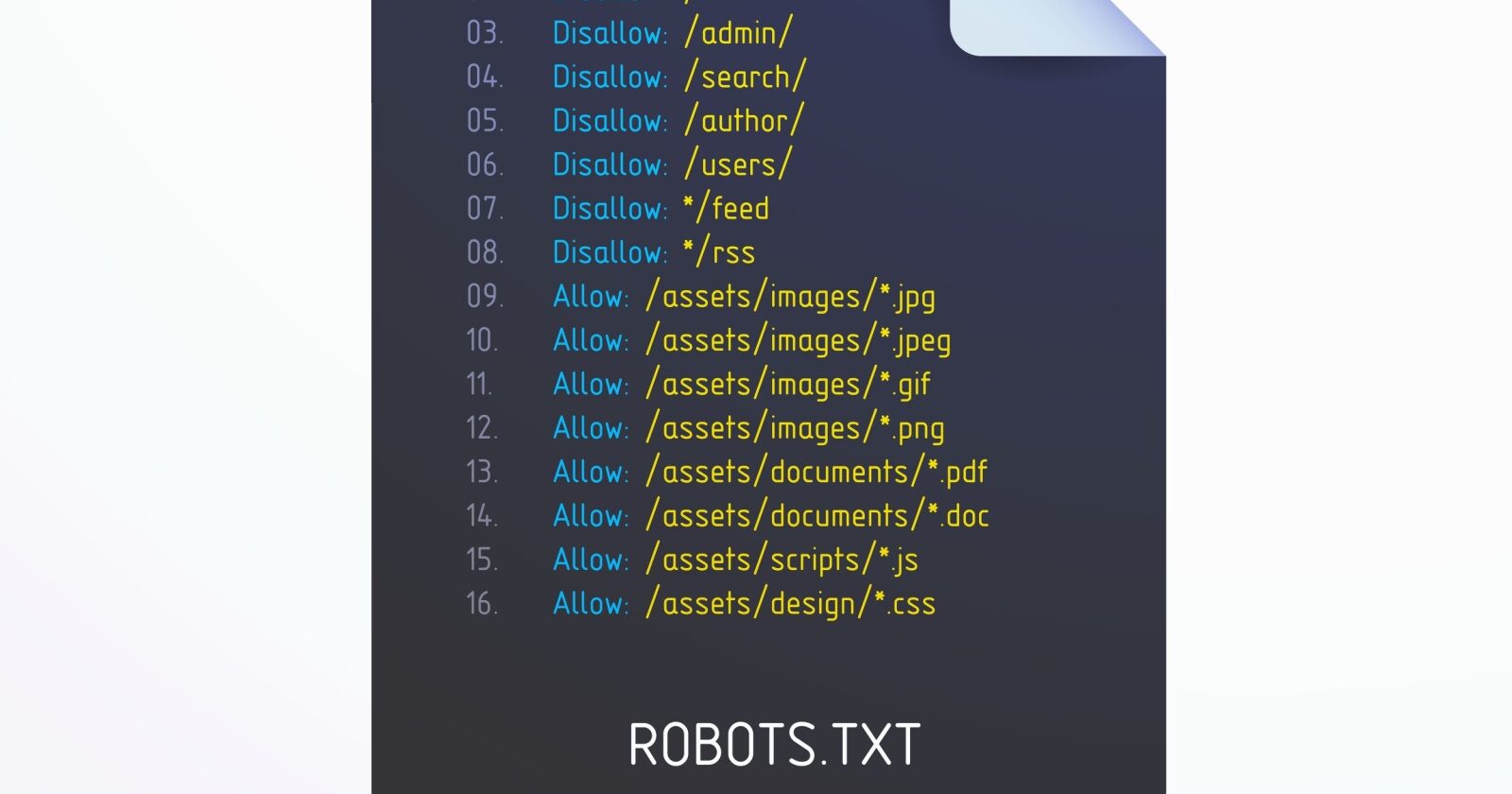

Combining Disallow and Allow Rules: Instead of blanket blocking, use the Disallow directive to restrict entire directories but pair it with Allow rules to permit access to specific valuable files within those directories. This nuanced control ensures important content remains crawlable while less important parts are blocked.

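- User-Agent Targeting: Use separate User-agent groups to give specific crawlers their own rules, for example disallowing an unwanted bot that overloads the server while leaving legitimate search engine crawlers unrestricted. Robots.txt cannot block IP address ranges, and it only works for bots that choose to obey it. Malicious bots typically ignore it, so block them by IP address or user-agent at the server, firewall, or CDN level instead.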

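- Sitemap Inclusion: Always include a Sitemap directive in robots.txt that gives the absolute URL of your sitemap. This helps search engines find the sitemap and, through it, discover your site's pages, which is especially useful for large sites with many URLs. Because a single sitemap file is limited to 50,000 URLs, large sites typically point to a sitemap index file or list several Sitemap lines.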

- Avoid Blocking Noindex Pages: Do not block pages with a noindex meta tag in robots.txt, as search engines need access to these pages to see the noindex directive and exclude them from search results properly.

-

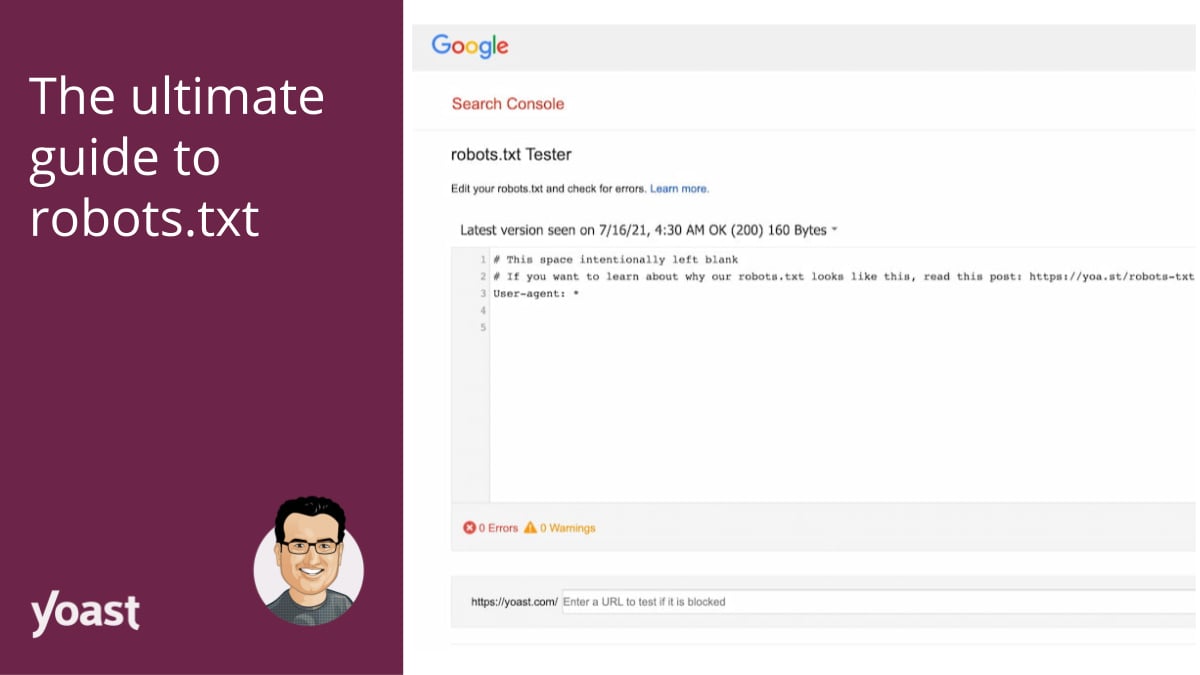

Regular Testing and Validation: Use tools like Google Search Console to test robots.txt syntax and effectiveness regularly. This prevents accidental blocking of important pages and ensures the file remains aligned with SEO goals.

- Keep Robots.txt Simple and Maintainable: Avoid overly complex or overlapping rules. Clear, straightforward directives reduce errors and make it easier for teams to manage and update the file as the website evolves.

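- Security Note: Robots.txt is not a security tool. The file is publicly readable, so listing sensitive paths in it can advertise them, and a disallowed URL can still be indexed if other sites link to it. Protect sensitive content with authentication, and use a noindex meta tag (on a page crawlers are allowed to fetch) when the goal is only to keep a page out of search results.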

These techniques collectively help large websites manage crawling efficiently, improve SEO performance, and control which parts of the site well-behaved bots crawl.

WebSeoSG offers the highest quality website traffic services in Singapore. We provide a variety of traffic services for our clients, including website traffic, desktop traffic, mobile traffic, Google traffic, search traffic, eCommerce traffic, YouTube traffic, and TikTok traffic. Our website boasts a 100% customer satisfaction rate, so you can confidently purchase large amounts of SEO traffic online. For just 40 SGD per month, you can immediately increase website traffic, improve SEO performance, and boost sales!

Having trouble choosing a traffic package? Contact us, and our staff will assist you.
