To create and configure a robots.txt file for your website, follow these key steps:

- Create the robots.txt file

  Use a plain text editor (e.g., Notepad, TextEdit) to create a file named robots.txt. Save it with UTF-8 encoding to ensure compatibility with search engines.
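If you would rather generate the file from a script than from a text editor, this step can be sketched in Python roughly as follows. The rules shown and the function name write_robots_txt are illustrative assumptions, not part of any tool mentioned in this article.

```python
from pathlib import Path

# Hypothetical rules; replace them with the directives your site needs.
RULES = """\
User-agent: *
Disallow: /private/
"""


def write_robots_txt(directory, rules=RULES):
    """Write robots.txt into `directory` as UTF-8 text and return its path."""
    path = Path(directory) / "robots.txt"
    # encoding="utf-8" matches the encoding recommended above;
    # newline="\n" keeps line endings the same on every platform.
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(rules)
    return path
```

Calling write_robots_txt(".") would create robots.txt in the current folder, ready to be uploaded.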

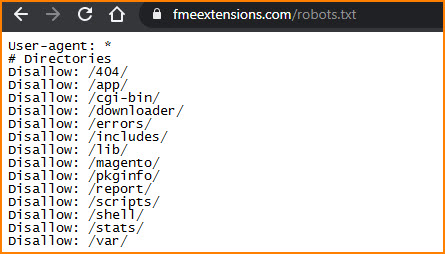

- Add rules (directives) for web crawlers

  The file contains groups of rules, each starting with a User-agent line specifying which crawler the rules apply to. Common directives include:

  - Disallow: blocks crawlers from accessing specific pages or directories.

  - Allow: permits access to specific pages within a disallowed directory.

  - Sitemap: (optional but recommended) provides the URL of your sitemap to help crawlers find and index your content efficiently.

  - Comments can be added using # to explain rules without affecting crawling.
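To make the idea of rule groups concrete, here is a minimal Python sketch that reads robots.txt text and collects the rules that belong to each User-agent. It is a simplified reader written for illustration only: the function name parse_groups and the crawler name ExampleBot are made up, and wildcards and less common directives are ignored.

```python
SAMPLE = """\
# Keep all crawlers out of the admin area
User-agent: *
Disallow: /admin/

User-agent: ExampleBot
Disallow: /

Sitemap: https://yourdomain.com/sitemap.xml
"""


def parse_groups(text):
    """Return (groups, sitemaps); groups maps a user-agent to its rules."""
    groups = {}       # user-agent (lowercased) -> list of (directive, path)
    sitemaps = []     # Sitemap lines apply to the whole file, not to a group
    current = []      # user agents of the group being read
    in_rules = False  # True once the current group has at least one rule
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                current, in_rules = [], False
            current.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif field in ("allow", "disallow"):
            in_rules = True
            for agent in current:
                groups[agent].append((field, value))
        elif field == "sitemap":
            sitemaps.append(value)
    return groups, sitemaps


groups, sitemaps = parse_groups(SAMPLE)
print(groups)
print(sitemaps)
```

The comment line is skipped, each User-agent line opens a group, and the Sitemap line is collected separately because it is not tied to any one crawler.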

- Upload the robots.txt file to your website’s root directory

  Place the file in the root folder of your website so it is accessible at https://yourdomain.com/robots.txt. Crawlers only look for the file at this location, so a robots.txt placed in a subdirectory is ignored.
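As a small illustration of where crawlers look, the following Python sketch derives the robots.txt address from any page URL on a site. The function name robots_txt_url is an assumption made for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt URL for the host that serves `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port); replace the path with
    # /robots.txt and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://yourdomain.com/blog/post?id=1"))
```

Whatever page you start from, the result always points at the root of that host, which is why the file has to live there.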

- Test your robots.txt file

  Verify the file is accessible by opening its URL in a browser’s incognito window. Use a tool such as the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) to check for syntax errors and confirm your directives work as intended.
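Before uploading, a quick offline check can catch obvious typos. The Python sketch below is a rough linter, not a substitute for Search Console: the function name lint_robots_txt is hypothetical, and the set of known directives is deliberately limited to the ones covered in this article, so other directives that some crawlers accept would be reported as unknown.

```python
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots_txt(text):
    """Return a list of human-readable problems found in robots.txt text."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown directive '{field}'")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: rule before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/'")
    return problems


for problem in lint_robots_txt("Disallow: private/\nDisalow: /tmp/\n"):
    print(problem)
```

An empty list means none of these basic checks failed; it does not prove that crawlers will interpret the rules the way you intend.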

Example of a simple robots.txt file:

    User-agent: *
    Disallow: /private/
    Allow: /private/public-info.html
    Sitemap: https://yourdomain.com/sitemap.xml

- This blocks all crawlers from the /private/ directory except for the public-info.html page.

- It also points crawlers to the sitemap location.
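Why the Allow line wins for public-info.html can be sketched in Python. The code assumes the precedence described in RFC 9309 and used by Google, where the longest matching path decides and Allow wins a tie. It handles plain path prefixes only (no * or $ wildcards), and the function name is_allowed is made up for this example.

```python
# Rules from the example above, for the "*" group.
RULES = [
    ("disallow", "/private/"),
    ("allow", "/private/public-info.html"),
]


def is_allowed(rules, path):
    """Apply longest-match precedence to (directive, prefix) rules."""
    best_length = -1
    allowed = True  # with no matching rule, crawling is allowed
    for directive, prefix in rules:
        # Matching is case-sensitive; an empty prefix matches nothing.
        if not prefix or not path.startswith(prefix):
            continue
        longer = len(prefix) > best_length
        tie_prefers_allow = len(prefix) == best_length and directive == "allow"
        if longer or tie_prefers_allow:
            best_length = len(prefix)
            allowed = directive == "allow"
    return allowed


for path in ("/private/public-info.html", "/private/notes.html", "/index.html"):
    print(path, is_allowed(RULES, path))
```

Both rules match /private/public-info.html, but the Allow path is longer, so that page stays crawlable while the rest of /private/ is blocked. Parsers that use a different precedence may reach a different answer for the same file.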

Additional tips:

- Use only one robots.txt file per host; each subdomain (for example, blog.yourdomain.com) needs its own file.

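- Paths in rules are case-sensitive, while directive names such as Disallow are not. For Google and other crawlers that follow RFC 9309, the most specific (longest) matching rule applies regardless of order; some older parsers apply the first matching rule instead.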

- If you cannot access your root directory, consider alternatives such as the robots meta tag (for example, noindex) to keep individual pages out of search results.

- Content management systems like WordPress and Shopify often provide interfaces to edit robots.txt directly.

This approach helps search engines crawl and index your site effectively while respecting your preferences on which pages crawlers may access.
