To test and validate your robots.txt file effectively, follow these step-by-step instructions:


Prepare Your robots.txt File

Ensure your robots.txt file is correctly formatted, named exactly "robots.txt", encoded in UTF-8, and placed in your website’s root directory (e.g., https://yoursite.com/robots.txt).
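The location and encoding requirements can be checked before upload. The following is a minimal sketch, not a complete validator: the helper names `robots_url` and `check_encoding` are hypothetical, and the sample URL and bytes are illustrative only.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(site_url: str) -> str:
    """Return the root-level robots.txt URL for any URL on the site."""
    parts = urlsplit(site_url)
    # Keep only scheme and host; the file must sit at the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def check_encoding(raw: bytes) -> list[str]:
    """Return a list of encoding problems found in the raw file bytes."""
    problems = []
    try:
        raw.decode("utf-8")
    except UnicodeDecodeError as exc:
        problems.append(f"not valid UTF-8 (byte offset {exc.start})")
    return problems


print(robots_url("https://yoursite.com/blog/post?id=1"))
# A Latin-1 encoded "é" is not valid UTF-8, so this reports one problem.
print(check_encoding(b"Disallow: /caf\xe9/\n"))
```

Passing the bytes read from your draft file to `check_encoding` should return an empty list if the file is valid UTF-8.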

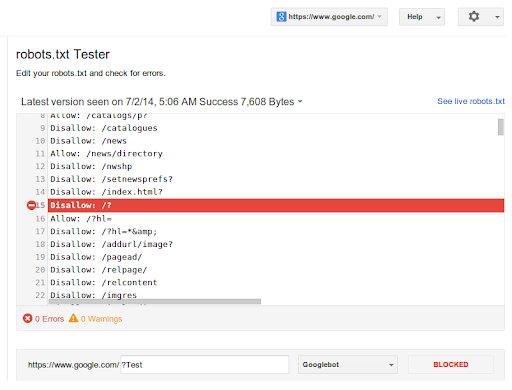

Use Google Search Console’s Robots.txt Tester

- Sign in to Google Search Console and select your website property.

- Navigate to Legacy tools and reports > robots.txt Tester.

- The tool will load your current robots.txt file. You can edit it directly here to add or modify rules.

- Enter specific URLs in the test field to check if they are allowed or blocked by your rules.

- Review any blocking lines highlighted and adjust your rules accordingly. Repeat testing until the file behaves as expected.
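The same allowed-or-blocked check can be approximated in a script with Python's standard `urllib.robotparser` module. This is a rough sketch under stated assumptions: the rules and URLs are made-up examples, `is_allowed` is a hypothetical helper, and Python's parser uses simple prefix matching, so its verdicts may differ from Google's for rules that rely on `*` or `$` wildcards.

```python
from urllib.robotparser import RobotFileParser

# Example rules only; replace with the contents of your own file.
RULES = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/
"""


def is_allowed(rules: str, user_agent: str, url: str) -> bool:
    """Parse the rules text and report whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "https://yoursite.com/blog/post",
    "https://yoursite.com/private/report.html",
):
    verdict = "allowed" if is_allowed(RULES, "Googlebot", url) else "blocked"
    print(f"{url}: {verdict}")
```

With these example rules, the blog URL is reported as allowed and the `/private/` URL as blocked.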


Test with Bing Webmaster Tools

- Log into Bing Webmaster Tools and select your site.

- Go to Configure My Site > Robots.txt Tester.

- Test URLs against your robots.txt rules to identify any issues.
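One issue worth testing for is that a crawler with its own `User-agent` group follows only that group and ignores the `*` group. The sketch below illustrates this with Python's `urllib.robotparser`; the rules, URLs, and the `allowed_for` helper are assumptions for illustration, not output from Bing's tool.

```python
from urllib.robotparser import RobotFileParser

# Example rules: bingbot has its own group, everyone else falls under "*".
RULES = """\
User-agent: bingbot
Disallow: /search/

User-agent: *
Disallow: /private/
"""


def allowed_for(rules: str, user_agent: str, url: str) -> bool:
    """Report whether the named crawler may fetch url under the rules."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


for agent in ("bingbot", "Googlebot"):
    for path in ("/search/results", "/private/report.html"):
        url = "https://yoursite.com" + path
        verdict = "allowed" if allowed_for(RULES, agent, url) else "blocked"
        print(f"{agent:10} {path:22} {verdict}")
```

Here bingbot is blocked from `/search/` but allowed into `/private/`, because the `*` group no longer applies to it. If that is not what you intend, repeat the shared rules inside the crawler-specific group.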


Validate Syntax with Online Tools

Use online validators such as Google’s Robots Testing Tool or Ryte’s Robots.txt Validator to check for syntax errors or misconfigurations in your Disallow and Allow commands.
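To give a sense of what such validators look for, here is a small lint sketch. It is not how any of the named tools work internally; the `lint_robots` function, the set of accepted fields, and the sample file are all assumptions chosen for illustration (for instance, `crawl-delay` is accepted here even though not every crawler honors it).

```python
# Fields this sketch treats as valid; real validators may accept more.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(text: str) -> list[str]:
    """Return human-readable problems found in robots.txt text."""
    problems = []
    seen_user_agent = False
    for number, line in enumerate(text.splitlines(), start=1):
        content = line.split("#", 1)[0].strip()  # drop comments
        if not content:
            continue
        if ":" not in content:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in content.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown field '{field}'")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append(f"line {number}: '{field}' before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path should start with '/'")
    return problems


SAMPLE = """\
Disallow: /early/
User-agent: *
Dissalow: /typo/
Disallow: private/
Allow /no-colon
Sitemap: https://yoursite.com/sitemap.xml
"""

for problem in lint_robots(SAMPLE):
    print(problem)
```

The sample deliberately contains four mistakes: a rule before any `User-agent` line, a misspelled field, a path without a leading slash, and a line with no colon.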

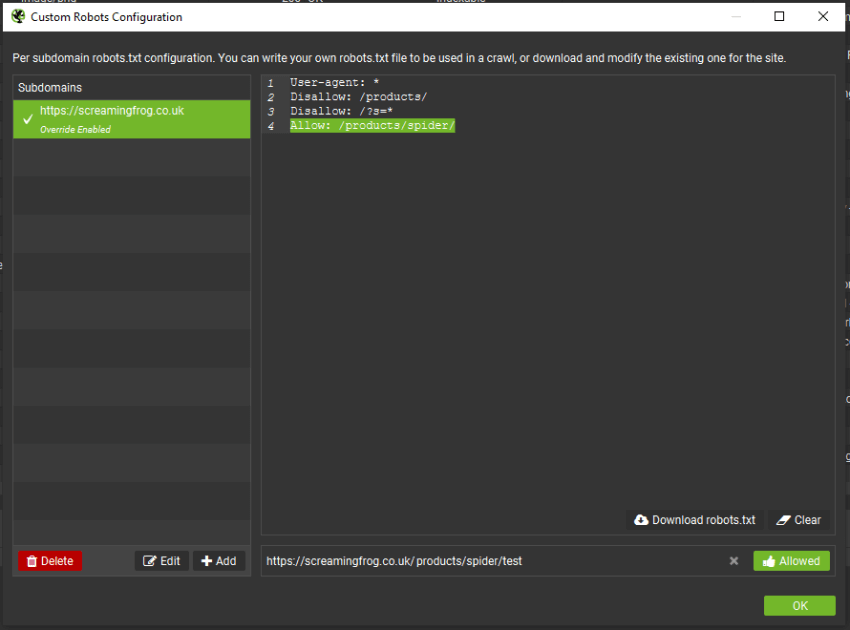

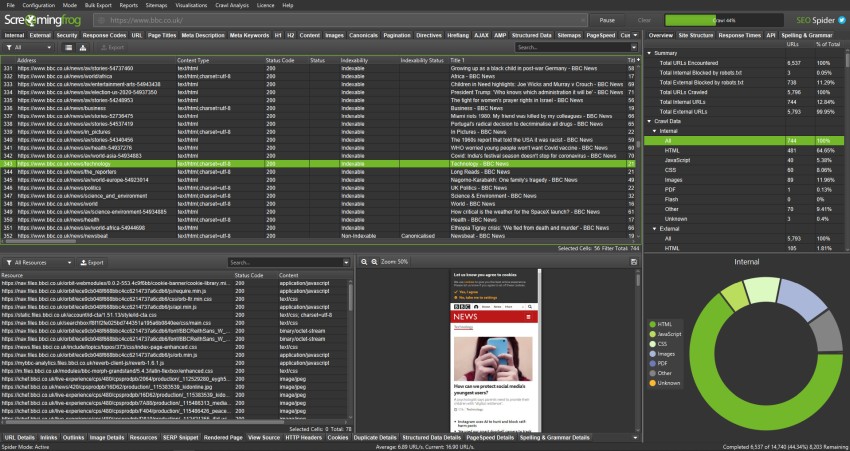

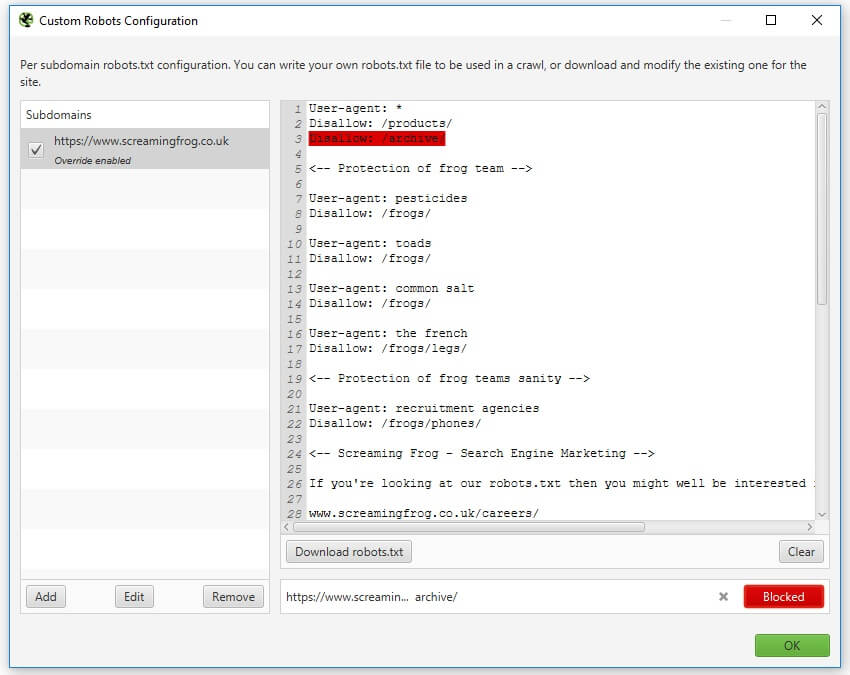

Test at Scale Using SEO Spider Tools

For large sites or complex robots.txt files, use tools like Screaming Frog SEO Spider:

- Download and open the SEO Spider tool.

- Crawl your site or upload a list of URLs.

- Use the custom robots.txt feature to test and validate which URLs are blocked or allowed by your robots.txt directives.

- This helps you find URLs that are unintentionally blocked or allowed before you deploy changes live.
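The idea of checking many URLs against a draft file can also be sketched in a few lines of Python. This is not a substitute for a crawler such as Screaming Frog; the `find_unintended_blocks` helper, the draft rules, and the URL list are hypothetical, and matching follows Python's `urllib.robotparser` (plain prefix matching), which may differ from a search engine's behavior.

```python
from urllib.robotparser import RobotFileParser


def find_unintended_blocks(rules: str, user_agent: str, must_crawl: list[str]) -> list[str]:
    """Return the URLs from must_crawl that the draft rules would block."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [url for url in must_crawl if not parser.can_fetch(user_agent, url)]


# Draft rules with an overly broad prefix: "/p" also matches "/products/".
DRAFT_RULES = """\
User-agent: *
Disallow: /p
"""

# Pages that must stay crawlable (illustrative list).
MUST_CRAWL = [
    "https://yoursite.com/",
    "https://yoursite.com/products/widget",
    "https://yoursite.com/blog/launch",
]

for url in find_unintended_blocks(DRAFT_RULES, "Googlebot", MUST_CRAWL):
    print("unintended block:", url)
```

In this example the product page is flagged, because `Disallow: /p` blocks every path that begins with `/p`.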


Local Testing (Optional)

Before deploying, you can test your robots.txt file locally by simulating crawler behavior using browser extensions or local testing applications to preview how bots interpret your rules.

Deploy and Monitor

After thorough testing, upload your validated robots.txt file to your website root. Continuously monitor your site’s indexing status via Google Search Console and Bing Webmaster Tools to catch any unexpected crawling issues.
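As a complement to the Search Console and Bing reports, you could also confirm that the file served live still matches the copy you validated. The sketch below is one possible approach, not part of either tool; the function names are hypothetical, and the fetch step is left to be called by you since it needs network access.

```python
import hashlib
from urllib.request import urlopen


def fingerprint(raw: bytes) -> str:
    """Hash the file after normalizing line endings and outer whitespace."""
    normalized = raw.replace(b"\r\n", b"\n").strip()
    return hashlib.sha256(normalized).hexdigest()


def fetch_live(url: str, timeout: float = 10.0) -> bytes:
    """Download the robots.txt currently served at url."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def has_drifted(validated: bytes, live: bytes) -> bool:
    """Return True when the live file differs from the validated copy."""
    return fingerprint(validated) != fingerprint(live)


# Example usage (requires network access and a local validated copy):
#   with open("robots.txt", "rb") as handle:
#       validated = handle.read()
#   live = fetch_live("https://yoursite.com/robots.txt")
#   print("drift detected" if has_drifted(validated, live) else "in sync")
```

Normalizing line endings avoids false alarms when the server or an upload tool converts between Windows and Unix newlines.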

By following these steps, you can confidently test and validate your robots.txt file to ensure search engines crawl and index your site as intended, avoiding SEO pitfalls caused by misconfigured rules.
