To troubleshoot robots.txt issues in Google Search Console, particularly the "Blocked by robots.txt" error, follow these key steps:

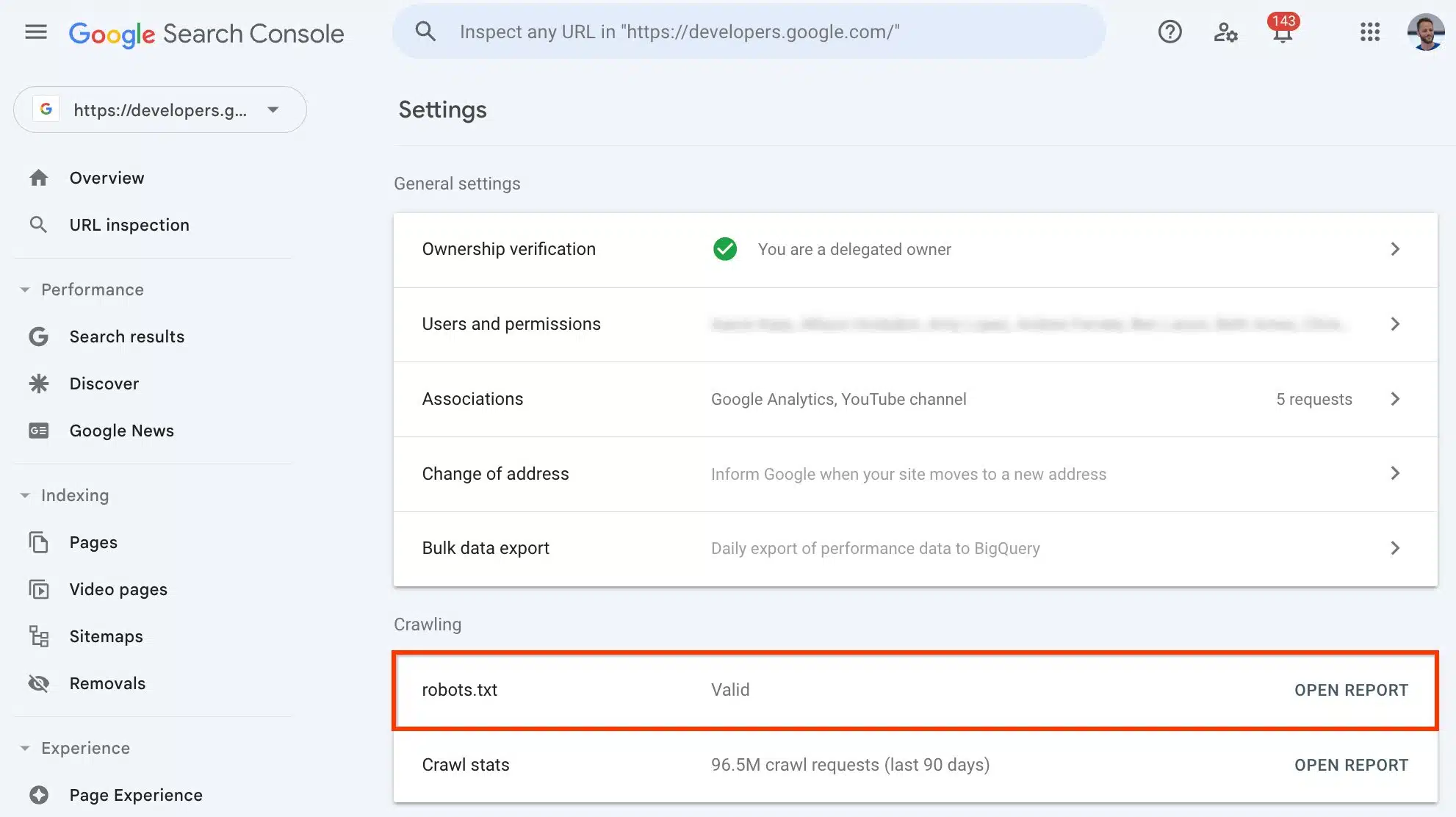

Identify the error in Google Search Console:

- Log in to Google Search Console.

- Navigate to Indexing > Pages.

- Scroll to the Why pages aren’t indexed section.

- Click on Blocked by robots.txt to see which URLs are affected.

Understand the cause:

- The error usually means your robots.txt file contains a Disallow directive blocking Googlebot from crawling certain URLs.

- Common causes include:

  - Incorrect or overly broad Disallow rules blocking important pages or directories.

  - Legacy or outdated rules that are no longer relevant.

  - Manual syntax errors (e.g., missing slashes).

  - SEO plugins or tools automatically generating restrictive rules without your knowledge.
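To see how a missing slash turns into an overly broad rule, here is a minimal sketch using Python's standard `urllib.robotparser`. The helper name `blocked_paths` and the sample rules and paths are hypothetical, chosen only for illustration. Python's parser uses simple prefix matching and does not implement Google's wildcard (`*`, `$`) or longest-match handling, so treat it as an approximation for plain rules like these.

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(rules: str, paths: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the paths that the given robots.txt text disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return [p for p in paths if not parser.can_fetch(agent, p)]


# Hypothetical rules: without the trailing slash the rule is a plain prefix,
# so it also catches /blog-news and /blogroll, not just the /blog/ directory.
too_broad = "User-agent: *\nDisallow: /blog\n"
narrower = "User-agent: *\nDisallow: /blog/\n"

paths = ["/blog/post-1", "/blog-news", "/blogroll", "/about"]

print(blocked_paths(too_broad, paths))  # ['/blog/post-1', '/blog-news', '/blogroll']
print(blocked_paths(narrower, paths))   # ['/blog/post-1']
```

The only difference between the two rule sets is one trailing slash, which is why a quick review of each Disallow path is worthwhile.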

Use tools to diagnose:

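- Use Google Search Console’s URL Inspection tool to check whether a specific URL is blocked, and the robots.txt report (under Settings) to see the version of the file Google last fetched and any parsing problems. The older standalone robots.txt Tester has been retired.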

- Access your robots.txt file directly by visiting yourdomain.com/robots.txt to review its contents.

- Look for lines like `User-agent: *` followed by `Disallow: /`, which block all crawling.
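The same check can be scripted for a batch of URLs. The sketch below is one possible approach, not Google's own logic: `fetch_robots_txt` and `crawl_report` are hypothetical helper names, and `https://example.com` is a placeholder domain. It relies on Python's standard `urllib.robotparser`, which does prefix matching only, so results may differ from Googlebot for rules that use wildcards.

```python
from urllib.parse import urljoin
from urllib.request import urlopen
from urllib.robotparser import RobotFileParser


def fetch_robots_txt(site: str, timeout: float = 10.0) -> str:
    """Download /robots.txt from the site root and return it as text."""
    with urlopen(urljoin(site, "/robots.txt"), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl_report(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Map each URL to True if `agent` may crawl it, False if it is blocked."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


# Offline example with the block-all rule; every URL comes back as blocked.
block_all = "User-agent: *\nDisallow: /\n"
report = crawl_report(block_all, ["https://example.com/", "https://example.com/products"])
for url, allowed in report.items():
    print(("allowed" if allowed else "BLOCKED"), url)
```

To run it against a live site, pass the downloaded file in, for example `crawl_report(fetch_robots_txt("https://example.com"), urls)`, using the affected URLs listed in the Page Indexing report.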

Decide if the pages should be indexed:

- If the blocked pages should be indexed, remove or modify the Disallow directives in robots.txt that block those URLs.

- If the pages are intentionally blocked and should not be indexed, you may leave the robots.txt as is.

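- Note: Blocking crawling via robots.txt does not guarantee exclusion from Google’s index. If other pages link to a blocked URL, it can still be indexed without its content. To reliably keep a page out of the index, use a noindex meta tag or an X-Robots-Tag HTTP header and leave the page crawlable, because Googlebot cannot see a noindex directive on a page that robots.txt blocks.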
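As a rough way to confirm that a page actually carries a noindex signal, the sketch below inspects a page's HTML and response headers. The names `has_noindex` and `_RobotsMetaParser` are hypothetical, and the logic is deliberately simplified: it checks only the `robots` and `googlebot` meta tags and a plain `X-Robots-Tag` value, and it does not handle user-agent-prefixed header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser

# "none" is shorthand for "noindex, nofollow".
BLOCKING_DIRECTIVES = {"noindex", "none"}


class _RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """Return True if the meta tags or the X-Robots-Tag header ask for noindex."""
    parser = _RobotsMetaParser()
    parser.feed(html)
    header_value = next(
        (value for key, value in headers.items() if key.lower() == "x-robots-tag"), ""
    )
    header_directives = [d.strip().lower() for d in header_value.split(",")]
    found = set(parser.directives) | set(header_directives)
    return bool(found & BLOCKING_DIRECTIVES)


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page, {}))                                   # True
print(has_noindex("<p>Hello</p>", {"X-Robots-Tag": "noindex"}))  # True
print(has_noindex("<p>Hello</p>", {}))                         # False
```

A page that returns `True` here still needs to be crawlable for Google to act on the directive.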

Fix the robots.txt file:

- Edit the robots.txt file to remove or adjust Disallow rules that block important content.

- Correct any syntax errors.

- If using SEO plugins, check their settings to ensure they are not adding unwanted rules.

- After updating, re-upload the file to your server.
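Before re-uploading, a quick automated pass can catch the most common slips. The following is a minimal sketch of such a check, not a full validator: `lint_robots_txt` and `KNOWN_DIRECTIVES` are hypothetical names, and the directive list is an assumption covering the commonly used fields (Google itself ignores `Crawl-delay`).

```python
# Assumed set of commonly used fields; anything else is reported as a likely typo.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list[str]:
    """Return human-readable warnings for common robots.txt mistakes."""
    problems: list[str] = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive '{field}'")
        elif field == "user-agent":
            seen_user_agent = True
        elif field in ("allow", "disallow"):
            if not seen_user_agent:
                problems.append(f"line {number}: rule appears before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path '{value}' should start with '/'")
            if field == "disallow" and value == "/":
                problems.append(f"line {number}: 'Disallow: /' blocks the whole site")
    return problems


# Hypothetical file with a missing slash, a misspelled directive, and a block-all rule.
sample = "User-agent: *\nDisallow: blog/\nDisalow: /tmp/\nDisallow: /\n"
for problem in lint_robots_txt(sample):
    print(problem)
```

An empty result does not prove the file is correct; it only means none of these specific patterns were found.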

Request re-crawling:

- After fixing robots.txt, use Google Search Console’s URL Inspection Tool to request re-crawling of affected URLs.

- Monitor the status in the Page Indexing report to confirm the issue is resolved.

When to ignore the error:

- If the blocked URLs are intentionally excluded from crawling, the error can be safely ignored.

- Google will continue to respect the robots.txt directives and will not crawl those pages, although a blocked URL can still appear in the index if other pages link to it.

Following these steps will help you effectively diagnose and resolve robots.txt-related indexing issues reported in Google Search Console.
