Robots.txt and LLMs.txt serve distinct but complementary roles in managing how automated systems interact with website content.


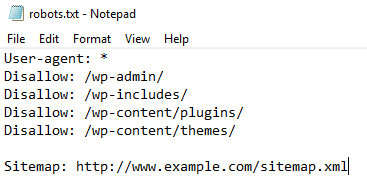

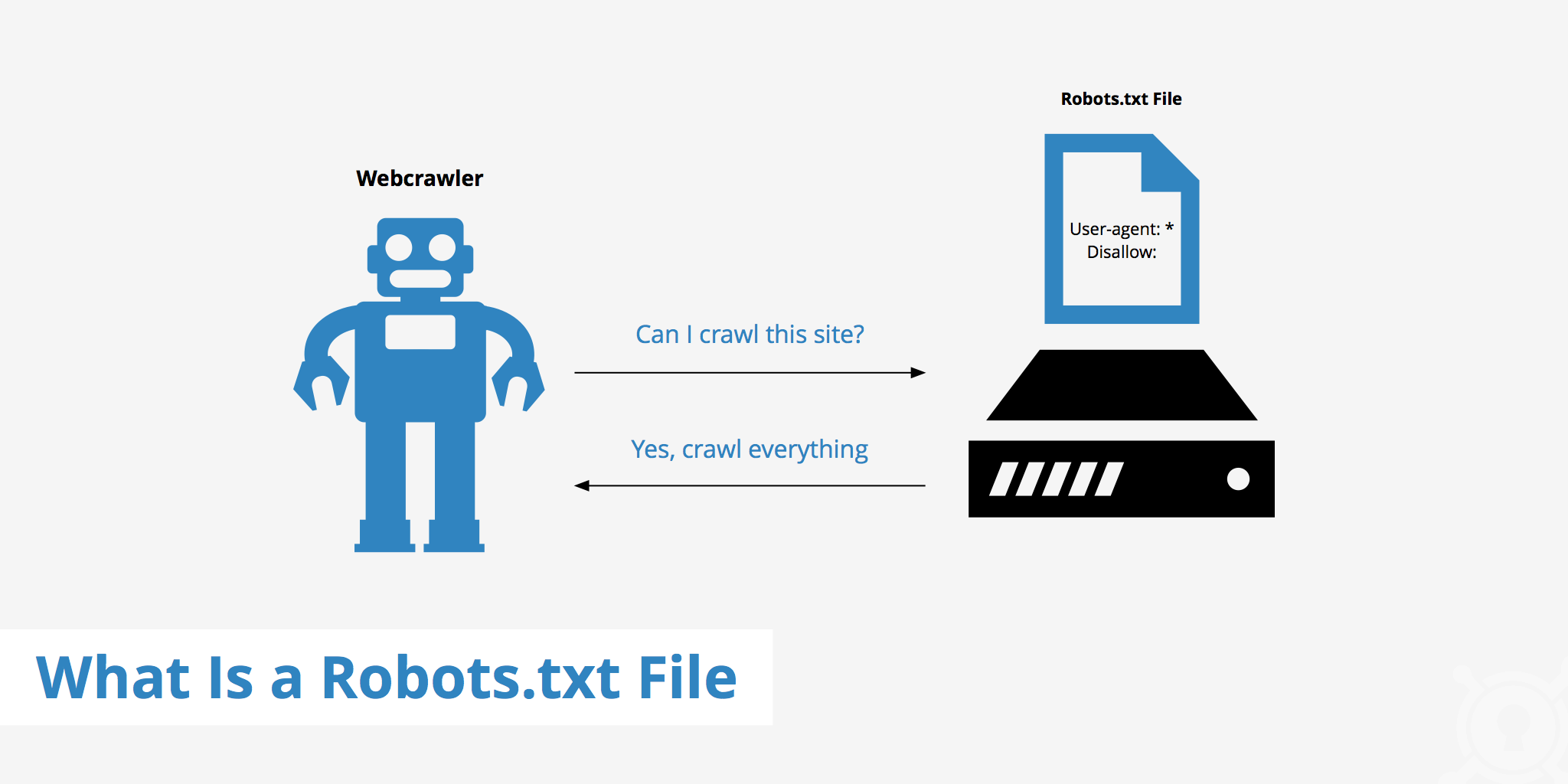

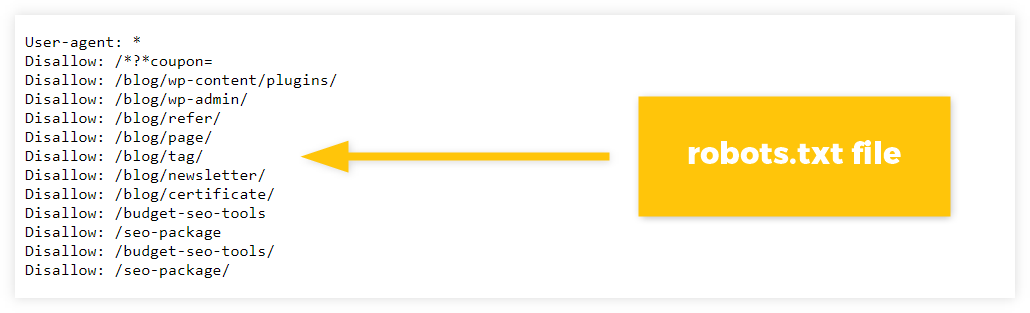

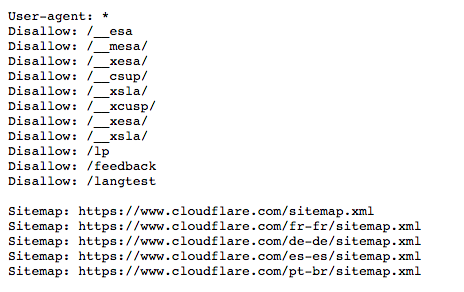

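Robots.txt is a long-established plain-text file, placed at the root of a website, that tells web crawlers (such as Googlebot or Bingbot) which parts of the site they may or may not crawl. Its primary function is access control: it tells bots what they can and cannot visit, which helps manage crawl budget and keeps compliant crawlers away from low-value, duplicate, or private URLs. It controls crawling rather than indexing, however. A disallowed URL can still appear in search results if other pages link to it, so use a noindex directive or password protection to keep a page out of the index. The format is standardized as the Robots Exclusion Protocol in RFC 9309, and compliance by crawlers is voluntary.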
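To make the allow/disallow model concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt rules, paths, and example.com URLs are hypothetical; the point is only to show how a crawler that honors the file decides, per user agent, whether a URL may be fetched.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every bot is kept out of /admin/ and /search,
# and OpenAI's GPTBot is blocked from the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search

User-agent: GPTBot
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    checks = [
        ("Googlebot", "https://example.com/blog/post"),
        ("Googlebot", "https://example.com/admin/login"),
        ("GPTBot", "https://example.com/blog/post"),
    ]
    for agent, url in checks:
        # can_fetch() uses the group naming this user agent, else the "*" group.
        print(f"{agent:<10} {url:<35} allowed={rp.can_fetch(agent, url)}")
```

Running it shows Googlebot allowed on /blog/post but not on /admin/login, and GPTBot refused everywhere. Real crawlers use their own parsers, so treat this as a model of the rules rather than a guarantee of any particular bot's behavior.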


LLMs.txt is an emerging, experimental proposal (first published in 2024) aimed at large language models (LLMs) and AI chatbots such as ChatGPT, Google Gemini (formerly Bard), and others. Unlike robots.txt, it does not block or allow crawling; instead it acts as a content curation tool. It is a Markdown file that gives AI systems a short summary of the site and a curated list of links to its most useful, high-quality pages, signalling which content is best suited for ingestion, summarization, and citation. Essentially, it is a curated "treasure map", or specialized sitemap, that helps AI models find and use the best content efficiently.
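The llms.txt proposal describes a Markdown file with an H1 site name, a blockquote summary, and H2 sections listing links, each optionally followed by a colon and a short note; a section titled "Optional" marks links a model may skip when its context is limited. The sketch below renders such a file in Python. The `Link` dataclass, the `build_llms_txt` function, the site name, and the URLs are all hypothetical, and the sketch omits the free-form Markdown the proposal also allows between the summary and the link sections.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """One entry in an llms.txt file list: a Markdown link plus optional notes."""
    name: str
    url: str
    notes: str = ""


def build_llms_txt(title: str, summary: str, sections: dict[str, list[Link]]) -> str:
    """Render an llms.txt body: H1 title, blockquote summary, H2 link sections."""
    lines = [f"# {title}", "", f"> {summary}"]
    for heading, links in sections.items():
        lines += ["", f"## {heading}", ""]
        for link in links:
            item = f"- [{link.name}]({link.url})"
            if link.notes:
                item += f": {link.notes}"
            lines.append(item)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical site and URLs, for illustration only.
    print(build_llms_txt(
        title="Example Co",
        summary="Example Co sells widgets; these pages explain products and pricing.",
        sections={
            "Guides": [
                Link("Getting started", "https://example.com/start.md", "Setup in five steps"),
                Link("Pricing", "https://example.com/pricing.md", "Current plans"),
            ],
            "Optional": [
                Link("Company history", "https://example.com/history.md"),
            ],
        },
    ))
```

Generating the file from a list of pages, rather than editing it by hand, makes it easier to keep in step with the site as key content changes.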

Key differences include:

| Aspect | Robots.txt | LLMs.txt |
|---|---|---|
| Purpose | Control crawler access | Guide AI systems to the content you want used and cited |
| Target agents | Web crawlers, both search (Googlebot) and AI (GPTBot) | Large language models and AI chatbots |
| Location | Domain root (/robots.txt) | Domain root (/llms.txt) |
| Directive type | Simple allow/disallow rules per user agent | Markdown: title, summary, and curated link lists with notes |
| Update frequency | Rare structural changes | Updated as key content changes |
| Behavior | Binary (allow/block) | Contextual (describe/guide) |
| Adoption status | Widely adopted; honored by major crawlers, though compliance is voluntary | Experimental; growing but limited adoption |

In practice, robots.txt remains the first line of defense for controlling which bots, including AI crawlers, may crawl your site, while llms.txt complements it by signalling to AI systems which content you want them to prioritise and briefly describing what each linked page covers.

For SEO and digital marketing, this means:

- Use robots.txt to make sure you do not unintentionally block AI crawlers such as OpenAI's GPTBot or Anthropic's ClaudeBot, so they can access your content if you want to be included in AI-generated answers.
- Use llms.txt to curate and highlight your best AI-friendly content, improving how AI chatbots understand, summarise, and cite your site. This matters more as AI-driven search shifts from keyword-based to intent-driven results.
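The first point can be checked mechanically. Below is a minimal Python sketch, again using the standard-library `urllib.robotparser`, that reports whether a few AI crawlers may fetch a given URL under a robots.txt you supply. The crawler list, the `audit_ai_crawlers` helper, and the sample robots.txt are assumptions for illustration; user-agent tokens change over time, so confirm them in each vendor's documentation.

```python
from urllib.robotparser import RobotFileParser

# Illustrative AI crawler user-agent tokens (an assumption, not a complete list).
AI_CRAWLERS = ("GPTBot", "ClaudeBot", "PerplexityBot")

# Hypothetical robots.txt that blocks two AI crawlers site-wide,
# for example after being copied from a template without review.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: GPTBot
User-agent: ClaudeBot
Disallow: /
"""


def audit_ai_crawlers(robots_txt: str, url: str,
                      agents: tuple[str, ...] = AI_CRAWLERS) -> dict[str, bool]:
    """Return {user_agent: allowed} for one URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    results = audit_ai_crawlers(EXAMPLE_ROBOTS_TXT, "https://example.com/blog/")
    for agent, allowed in results.items():
        print(f"{agent}: {'allowed' if allowed else 'BLOCKED'}")
```

With the sample file, GPTBot and ClaudeBot come out blocked and PerplexityBot allowed. One caveat: `urllib.robotparser` applies the first matching rule in file order, whereas RFC 9309 (and Google) use the most specific, longest matching rule, so verdicts can differ when Allow and Disallow rules overlap.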

In summary, robots.txt manages crawler access for search engines and AI bots alike, while llms.txt curates content for AI language models, making them complementary tools in the evolving landscape of web content management.
